How to Create Azure Standard Load Balancer with Backend Pools in Terraform

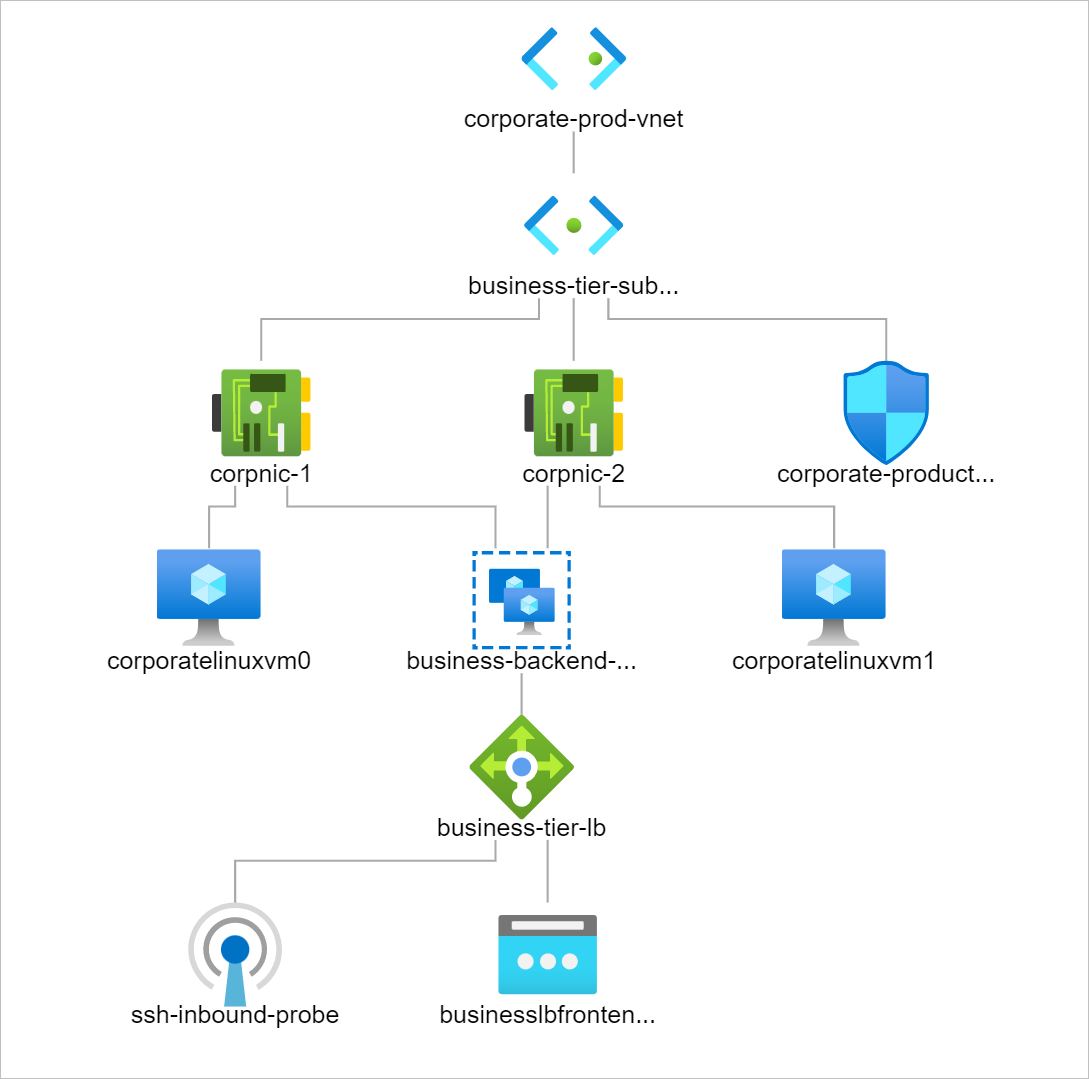

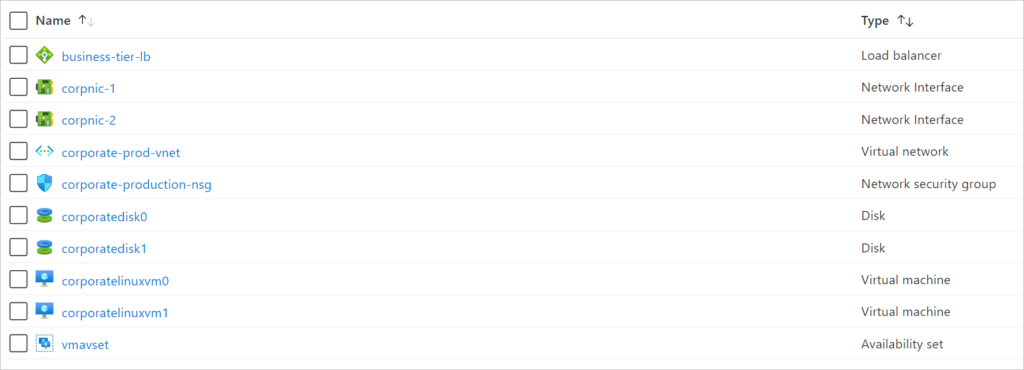

The build consists of the following components.

- Resource Group

- Virtual Machines

- Network Interfaces

- Standard Load Balancer

- Availability Sets

Open your IDE and create the following Terraform files:

providers.tf

network.tf

loadbalancer.tf

virtualmachines.tf

Alternatively, clone the accompanying repository:

git clone https://github.com/expertcloudconsultant/createazureloadbalancer.git

#Create the providers providers.tf

#IaC on Azure Cloud Platform | Declare Azure as the Provider

# Configure the Microsoft Azure Provider

terraform {

required_version = ">=0.12"

required_providers {

azurerm = {

source = "hashicorp/azurerm"

version = "~>2.0"

}

}

}

provider "azurerm" {

features {}

}

#Create the virtual network and subnets with Terraform. network.tf

#Create Resource Groups

resource "azurerm_resource_group" "corporate-production-rg" {

name = "corporate-production-rg"

location = var.avzs[0] #The first entry in var.avzs marks your primary region.

}

#Create Virtual Networks > Create Spoke Virtual Network

resource "azurerm_virtual_network" "corporate-prod-vnet" {

name = "corporate-prod-vnet"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

address_space = ["10.20.0.0/16"]

tags = {

environment = "Production Network"

}

}

#Create Subnet

resource "azurerm_subnet" "business-tier-subnet" {

name = "business-tier-subnet"

resource_group_name = azurerm_resource_group.corporate-production-rg.name

virtual_network_name = azurerm_virtual_network.corporate-prod-vnet.name

address_prefixes = ["10.20.10.0/24"]

}

#Create Private Network Interfaces

resource "azurerm_network_interface" "corpnic" {

name = "corpnic-${count.index + 1}"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

count = 2

ip_configuration {

name = "ipconfig-${count.index + 1}"

subnet_id = azurerm_subnet.business-tier-subnet.id

private_ip_address_allocation = "Dynamic"

}

}

#Create the standard load balancer with Terraform. loadbalancer.tf

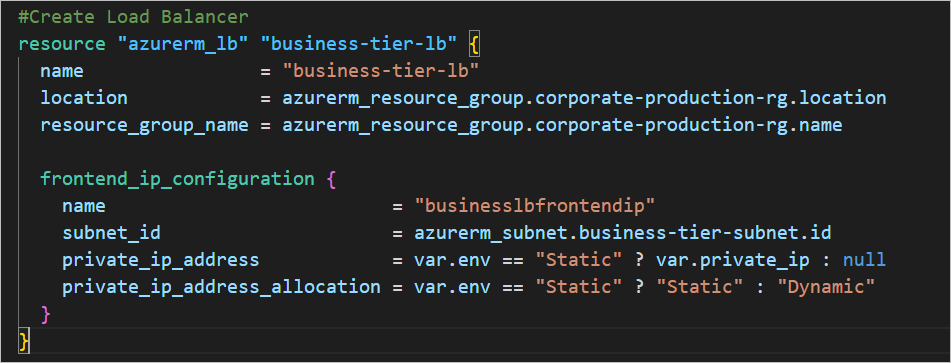

#Create Load Balancer

resource "azurerm_lb" "business-tier-lb" {

name = "business-tier-lb"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

sku = "Standard" #With azurerm 2.x, omitting sku creates a Basic load balancer.

frontend_ip_configuration {

name = "businesslbfrontendip"

subnet_id = azurerm_subnet.business-tier-subnet.id

private_ip_address = var.env == "Static" ? var.private_ip : null

private_ip_address_allocation = var.env == "Static" ? "Static" : "Dynamic"

}

}
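The frontend block above switches on `var.env`: when it equals "Static", the load balancer is pinned to `var.private_ip`; for any other value Azure assigns an address from the subnet. A minimal sketch of a `terraform.tfvars` that selects the static path is shown below. The file name and both values are assumptions for illustration and are not taken from the original repository.

```hcl
# terraform.tfvars (hypothetical values)

# "Static" pins the frontend to private_ip; any other value
# makes the allocation Dynamic and ignores private_ip.
env = "Static"

# Must be an unused address inside business-tier-subnet (10.20.10.0/24).
private_ip = "10.20.10.100"
```

Leaving `env` at a value other than "Static" results in `private_ip_address = null`, so the address is chosen by Azure at creation time.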

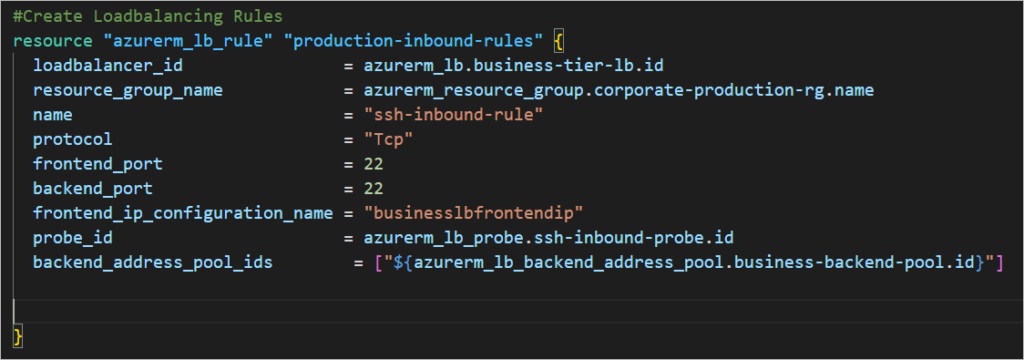

#Create Loadbalancing Rules

resource "azurerm_lb_rule" "production-inbound-rules" {

loadbalancer_id = azurerm_lb.business-tier-lb.id

resource_group_name = azurerm_resource_group.corporate-production-rg.name

name = "ssh-inbound-rule"

protocol = "Tcp"

frontend_port = 22

backend_port = 22

frontend_ip_configuration_name = "businesslbfrontendip"

probe_id = azurerm_lb_probe.ssh-inbound-probe.id

backend_address_pool_ids = [azurerm_lb_backend_address_pool.business-backend-pool.id]

}

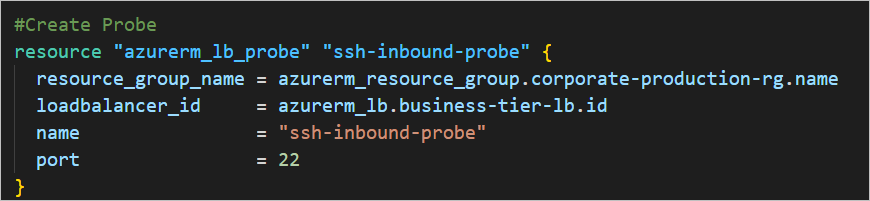

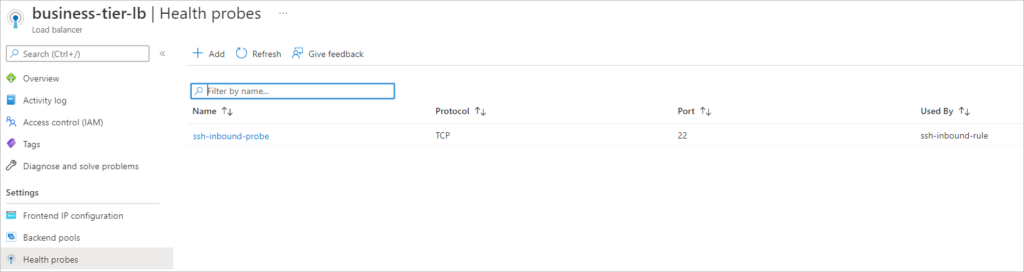

#Create Probe

resource "azurerm_lb_probe" "ssh-inbound-probe" {

resource_group_name = azurerm_resource_group.corporate-production-rg.name

loadbalancer_id = azurerm_lb.business-tier-lb.id

name = "ssh-inbound-probe"

port = 22

}

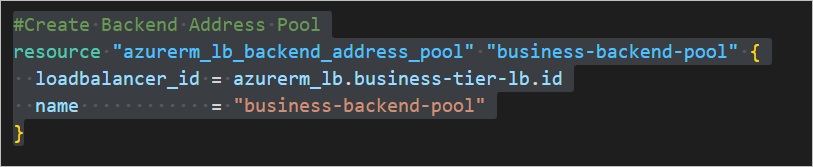

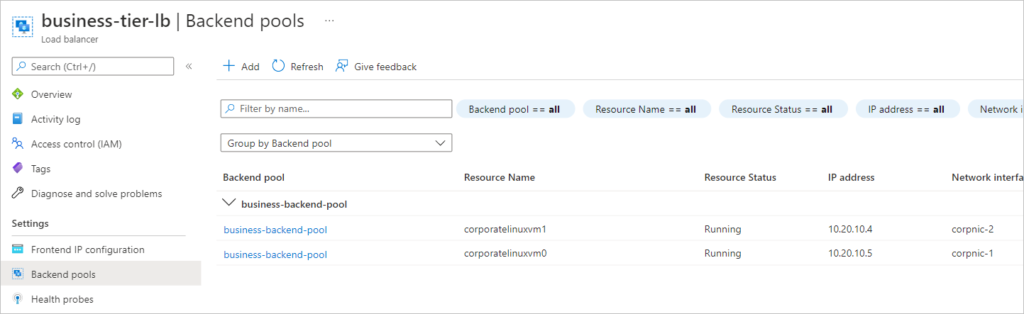

#Create Backend Address Pool

resource "azurerm_lb_backend_address_pool" "business-backend-pool" {

loadbalancer_id = azurerm_lb.business-tier-lb.id

name = "business-backend-pool"

}

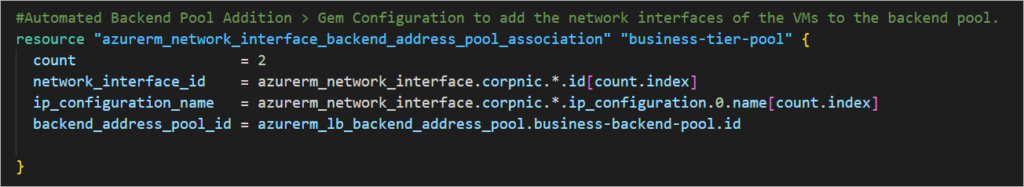

#Automated Backend Pool Addition > Gem Configuration to add the network interfaces of the VMs to the backend pool.

resource "azurerm_network_interface_backend_address_pool_association" "business-tier-pool" {

count = 2

network_interface_id = azurerm_network_interface.corpnic.*.id[count.index]

ip_configuration_name = azurerm_network_interface.corpnic.*.ip_configuration.0.name[count.index]

backend_address_pool_id = azurerm_lb_backend_address_pool.business-backend-pool.id

}

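This line of configuration is what adds each network interface to the backend pool. It collects the name of the first ip_configuration block from every NIC and then selects the one that matches count.index. I call it a gem because it took me quite some time to figure out.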

ip_configuration_name = azurerm_network_interface.corpnic.*.ip_configuration.0.name[count.index]
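The same lookup can also be written with direct index syntax instead of the legacy `.*.` splat, which some readers may find easier to follow. The sketch below is an equivalent form of the association resource, assuming Terraform 0.12 or later; it would replace the block above rather than sit alongside it, because both use the same resource name.

```hcl
resource "azurerm_network_interface_backend_address_pool_association" "business-tier-pool" {
  count = 2

  # Select the NIC whose index matches this association.
  network_interface_id = azurerm_network_interface.corpnic[count.index].id

  # Read the name of the first (and only) ip_configuration block on that NIC.
  ip_configuration_name = azurerm_network_interface.corpnic[count.index].ip_configuration[0].name

  backend_address_pool_id = azurerm_lb_backend_address_pool.business-backend-pool.id
}
```

Both forms resolve to "ipconfig-1" and "ipconfig-2" for the two NICs defined in network.tf.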

#Create the Linux virtual machines. virtualmachines.tf

# Create (and display) an SSH key

resource "tls_private_key" "linuxvmsshkey" {

algorithm = "RSA"

rsa_bits = 4096

}
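The comment above mentions displaying the key, but the listing does not include an output for it. One possible way to expose it is sketched below; the output name `linuxvm_private_key` is an assumption chosen for illustration.

```hcl
# Expose the generated private key so it can be saved for use with an SSH client.
# The output name is hypothetical.
output "linuxvm_private_key" {
  value     = tls_private_key.linuxvmsshkey.private_key_pem
  sensitive = true # keeps the key out of regular plan and apply output
}
```

On recent Terraform releases the key can then be written to a file with `terraform output -raw linuxvm_private_key`. Keep in mind that a key generated this way is also stored in the Terraform state file.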

#Custom Data Insertion Here

data "template_cloudinit_config" "webserverconfig" {

gzip = true

base64_encode = true

part {

content_type = "text/cloud-config"

content = "packages: ['nginx']"

}

}
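The `template` provider used above has been archived by HashiCorp, and the `hashicorp/cloudinit` provider offers a data source with the same arguments. The following is a sketch of the equivalent block under that assumption; if you adopt it, the virtual machine's `custom_data` would need to reference `data.cloudinit_config.webserverconfig.rendered` instead.

```hcl
# Same cloud-init payload, rendered by the hashicorp/cloudinit provider.
data "cloudinit_config" "webserverconfig" {
  gzip          = true
  base64_encode = true

  part {
    content_type = "text/cloud-config"

    # Install nginx on first boot.
    content = "packages: ['nginx']"
  }
}
```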

# Create Network Security Group and rule

resource "azurerm_network_security_group" "corporate-production-nsg" {

name = "corporate-production-nsg"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

#Add rule for Inbound Access

security_rule {

name = "SSH"

priority = 1001

direction = "Inbound"

access = "Allow"

protocol = "Tcp"

source_port_range = "*"

destination_port_range = var.ssh_access_port # Referenced SSH Port 22 from vars.tf file.

source_address_prefix = "*"

destination_address_prefix = "*"

}

}

#Connect NSG to Subnet

resource "azurerm_subnet_network_security_group_association" "corporate-production-nsg-assoc" {

subnet_id = azurerm_subnet.business-tier-subnet.id

network_security_group_id = azurerm_network_security_group.corporate-production-nsg.id

}

#Availability Set - Fault Domains [Rack Resilience]

resource "azurerm_availability_set" "vmavset" {

name = "vmavset"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

platform_fault_domain_count = 2

platform_update_domain_count = 2

managed = true

tags = {

environment = "Production"

}

}

#Create Linux Virtual Machines Workloads

resource "azurerm_linux_virtual_machine" "corporate-business-linux-vm" {

name = "${var.corp}linuxvm${count.index}"

location = azurerm_resource_group.corporate-production-rg.location

resource_group_name = azurerm_resource_group.corporate-production-rg.name

availability_set_id = azurerm_availability_set.vmavset.id

network_interface_ids = [azurerm_network_interface.corpnic[count.index].id]

size = "Standard_B1s" # "Standard_D2ads_v5" # "Standard_DC1ds_v3" "Standard_D2s_v3"

count = 2

#Create Operating System Disk

os_disk {

name = "${var.corp}disk${count.index}"

caching = "ReadWrite"

storage_account_type = "Standard_LRS" #Consider Storage Type

}

#Reference Source Image from Publisher

source_image_reference {

publisher = "Canonical" #az vm image list -p "Canonical" --output table

offer = "0001-com-ubuntu-server-focal" # az vm image list -p "Canonical" --output table

sku = "20_04-lts-gen2" #az vm image list -s "20.04-LTS" --output table

version = "latest"

}

#Create Computer Name and Specify Administrative User Credentials

computer_name = "corporate-linux-vm${count.index}"

admin_username = "linuxsvruser${count.index}"

disable_password_authentication = true

#Create SSH Key for Secured Authentication - on Windows Management Server [Putty + PrivateKey]

admin_ssh_key {

username = "linuxsvruser${count.index}"

public_key = tls_private_key.linuxvmsshkey.public_key_openssh

}

#Deploy Custom Data on Hosts

custom_data = data.template_cloudinit_config.webserverconfig.rendered

}
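The listings above reference several input variables (`var.avzs`, `var.env`, `var.private_ip`, `var.corp`, `var.ssh_access_port`), and a comment mentions a vars.tf file that is not shown. A minimal sketch of what that file might contain follows. Every default is an assumption made for illustration; only the variable names and the SSH port value of 22 come from the configuration above.

```hcl
# vars.tf (hypothetical defaults)

# Azure regions; the first entry is used as the resource group location.
variable "avzs" {
  type    = list(string)
  default = ["uksouth", "ukwest"]
}

# Set to "Static" to pin the load balancer frontend to private_ip.
variable "env" {
  type    = string
  default = "Dynamic"
}

# Only used when env is "Static"; must fall inside 10.20.10.0/24.
variable "private_ip" {
  type    = string
  default = "10.20.10.100"
}

# Prefix for virtual machine and OS disk names.
variable "corp" {
  type    = string
  default = "corp"
}

# Port opened by the NSG rule; the NSG argument expects a string.
variable "ssh_access_port" {
  type    = string
  default = "22"
}
```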

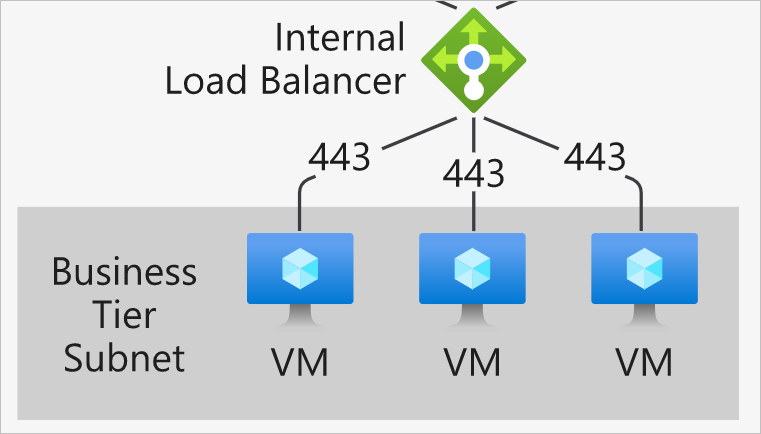

If you would rather build this solution in the Azure portal, follow Microsoft’s Get started with Azure Load Balancer by using the Azure portal to create an internal load balancer and two virtual machines.